Crawl Budget – What It Is, Why It Matters & How to Improve It

When you work on SEO, there’s one technical SEO concept that often gets neglected but can make a big difference for larger sites: crawl budget. In this article I’ll explain what it is, walk you through an example, and give you simple steps to optimize it.

What Is Crawl Budget?

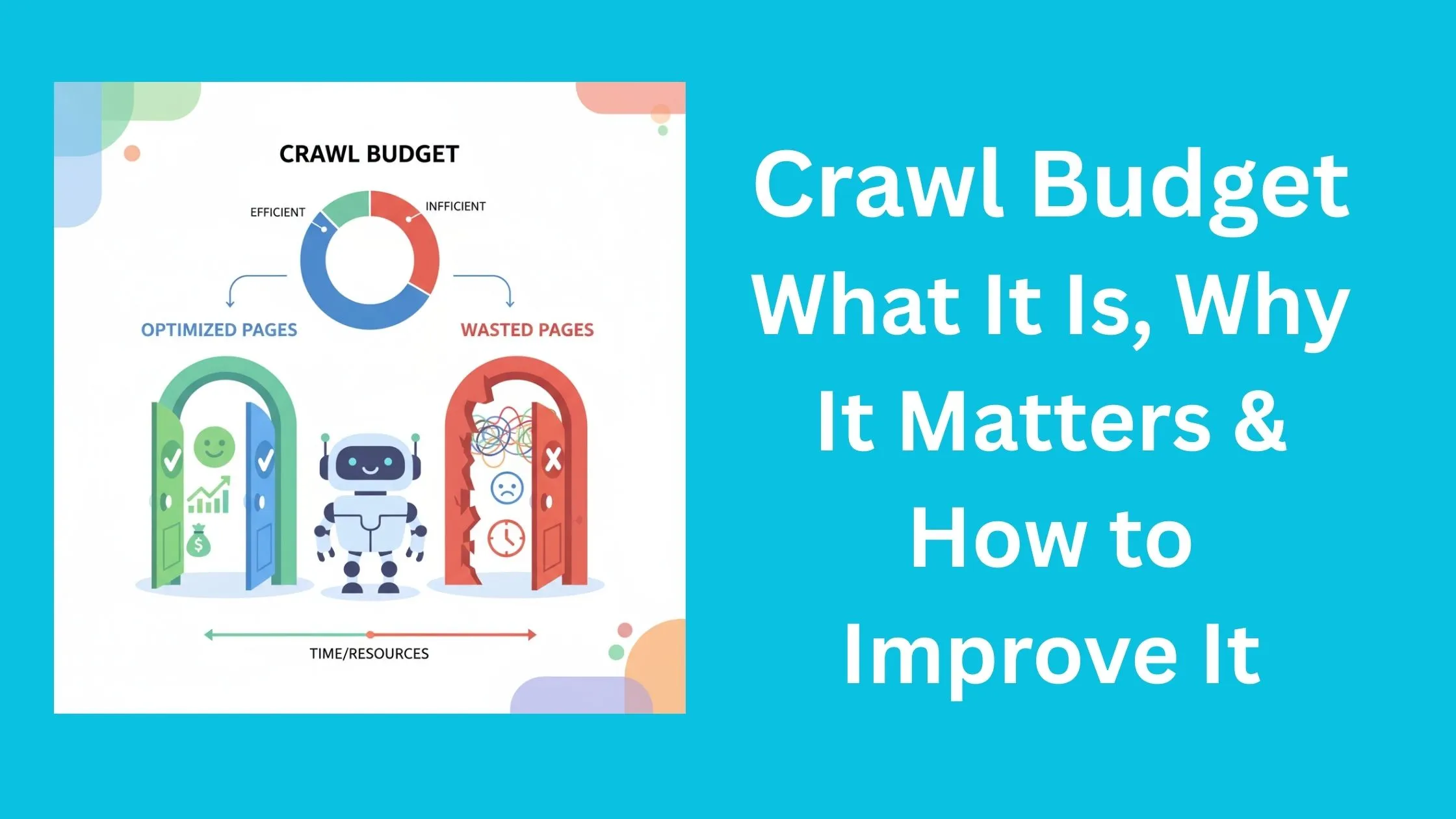

Crawl budget is the number of URLs (pages or other files) that search engines are willing and able to crawl on your website within a given period of time.

It’s determined by two main things:

- Crawl limit (or host load): How many requests your server can handle without slowing down or failing. If your site is slow, frequently erroring, or overloaded, search engines will crawl fewer pages.

- Crawl demand (or crawl scheduling): How much the search engine wants to crawl your pages. If certain pages are very popular, updated often, have many links, etc., they will be crawled more frequently.

Also, “pages” here means more than just HTML pages: CSS/JS files, PDFs, alternate versions of a page (mobile, desktop), etc. are also part of what gets crawled.
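Because non-HTML files draw on the same budget, it can help to see how the URLs crawlers request split by type. Here is a minimal Python sketch that classifies URLs by file extension. The extension-to-type mapping and the rule that extension-less URLs count as HTML pages are assumptions for illustration, and the sample URLs are made up.

```python
import posixpath
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical extension-to-type mapping; extend it for your own site.
RESOURCE_TYPES = {
    ".css": "css",
    ".js": "javascript",
    ".pdf": "pdf",
    ".jpg": "image",
    ".png": "image",
    ".webp": "image",
}

def resource_type(url: str) -> str:
    """Classify a URL by the extension of its path (the query string is ignored).
    URLs without a known extension are assumed to be HTML pages."""
    ext = posixpath.splitext(urlsplit(url).path)[1].lower()
    return RESOURCE_TYPES.get(ext, "html")

def tally_by_type(urls) -> Counter:
    """Count crawled URLs per resource type."""
    return Counter(resource_type(url) for url in urls)

if __name__ == "__main__":
    crawled = [  # made-up sample of crawled URLs
        "https://example.com/shoes/boots",
        "https://example.com/static/site.css",
        "https://example.com/static/app.js?v=3",
        "https://example.com/catalog.pdf",
        "https://example.com/bags",
    ]
    print(tally_by_type(crawled))
```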

Why You Should Care About Crawl Budget

If your crawl budget is poorly used, search engines may waste time crawling parts of your site that are useless or unimportant. That means:

- Important new or updated content might take longer to be discovered/indexed.

- Some pages might never be crawled or get low visibility in search results.

- Your SEO improvements may not show up quickly or fully.

If your website is large (e.g. tens of thousands of pages) or updated often, optimizing crawl budget becomes especially important. For smaller sites, many crawl budget issues matter less, but optimizing is still good practice.

Real‐World Example

Let’s say you run an e‑commerce website that sells shoes, bags, and accessories. You have:

- Main category pages (Shoes, Bags, Accessories)

- Product pages (50,000 items)

- Filter/sort pages (e.g. “Shoes > Boots > Color = Black”, /size, /price, etc.)

- Tag pages, internal search pages

- Some blog posts

What might happen:

- Because your product filters (color, size, price) can be combined freely, they may generate thousands of filter URLs, or even an endless number of combinations, that show nearly the same content. If search engines crawl many of these, most of that crawling is wasted.

- If your server is slow, then Googlebot crawls fewer of your product pages per day. If a product page is updated (e.g. price, stock, description), it might take longer to get re‐crawled.

- Non‐indexable pages and duplicates (e.g. the same product reachable via multiple URLs) also waste crawl budget.
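To see how fast filter combinations multiply, here is a back-of-the-envelope count in Python. The option counts, the four sort orders and the 20 category pages are hypothetical numbers for the shoe-store example, and the sketch assumes each filter takes at most one value at a time; multi-select filters, or the same parameters appearing in a different order, would produce even more URLs.

```python
from math import prod

# Hypothetical filter options for one category page (not from a real store).
FILTERS = {
    "color": 12,  # number of selectable colors
    "size": 15,   # number of selectable sizes
    "price": 6,   # number of price bands
}
SORT_ORDERS = 4   # e.g. relevance, price ascending, price descending, newest

def filter_urls_per_category(filters: dict[str, int], sort_orders: int) -> int:
    """Count distinct filter/sort URLs for one category.

    Each filter is either unset or set to exactly one value (hence the +1),
    and every combination can be shown in every sort order.
    """
    return prod(n + 1 for n in filters.values()) * sort_orders

if __name__ == "__main__":
    per_category = filter_urls_per_category(FILTERS, SORT_ORDERS)
    categories = 20  # hypothetical number of category/subcategory pages
    print(per_category)               # 5824
    print(per_category * categories)  # 116480
```

Under these assumptions the filters alone yield 116,480 URLs, more than twice the 50,000 product pages you actually want crawled.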

How Do You Know What Your Crawl Budget Is?

Here are two ways:

- Google Search Console, Crawl Stats report (under Settings): This shows how many crawl requests Google makes to your site per day. Multiply the daily figure by the number of days to get a rough monthly crawl capacity.

- Server logs: Look at your server logs to see how often crawlers like Googlebot hit various URLs, then compare what Search Console reports with what the logs show.
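If you want to try the server-log approach, here is a minimal Python sketch. It assumes your server writes the common Apache/Nginx “combined” log format and counts requests whose user agent contains “Googlebot”, per day and per path. User-agent strings can be spoofed, so for a real analysis you would also verify the requesting IPs (Google documents a reverse DNS check for this). The sample lines are made up.

```python
import re
from collections import Counter

# Matches the "combined" log format (an assumption about your server config).
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LINES = [  # made-up log lines for illustration
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes/boots HTTP/1.1" 200 2326 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:14:02:11 +0000] "GET /products?color=blue&size=7 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [11/Oct/2023:09:00:00 +0000] "GET /bags HTTP/1.1" 200 1800 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.2 - - [11/Oct/2023:10:15:00 +0000] "GET /shoes/boots HTTP/1.1" 304 0 "-" "{GOOGLEBOT_UA}"',
]

def googlebot_stats(lines):
    """Count requests whose user agent claims to be Googlebot, per day and per path."""
    per_day, per_path = Counter(), Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if not m or "Googlebot" not in m.group("agent"):
            continue  # skip unparsable lines and non-Googlebot traffic
        per_day[m.group("day")] += 1
        per_path[m.group("path")] += 1
    return per_day, per_path

if __name__ == "__main__":
    per_day, per_path = googlebot_stats(SAMPLE_LINES)
    for day, count in per_day.items():
        print(day, count)
    print(per_path.most_common(10))  # most-crawled paths
```

On a real log you would pass an open file instead of the sample list, e.g. `with open("access.log") as f: googlebot_stats(f)`, where the file name is a placeholder for your own log path.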

Example:

- If GSC says Google crawls ~200 pages/day → ~6,000 pages/month.

- If you have 50,000 product pages, at most about 12% of them can be crawled in a month, and only if Google spent every request on product pages.
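The monthly estimate above can be pushed one step further: how long would a single full pass over the product catalog take? This Python sketch does the arithmetic. `share_on_targets` is a hypothetical input for the fraction of crawl requests that actually reach product pages (the rest going to CSS/JS, filter URLs, redirects and so on), which you would have to estimate from your own logs.

```python
import math

def crawl_coverage(requests_per_day: float, target_urls: int,
                   share_on_targets: float = 1.0, days: int = 30) -> tuple[float, int]:
    """Estimate crawl coverage of a set of URLs.

    share_on_targets: assumed fraction of crawl requests spent on the target URLs.
    Returns (fraction of targets crawled per period of `days`,
             number of such periods needed to crawl every target once).
    Assumes no target URL is crawled twice within one pass.
    """
    crawled_per_period = requests_per_day * days * share_on_targets
    fraction = crawled_per_period / target_urls
    periods_for_full_pass = math.ceil(target_urls / crawled_per_period)
    return fraction, periods_for_full_pass

if __name__ == "__main__":
    print(crawl_coverage(200, 50_000))       # all requests go to product pages
    print(crawl_coverage(200, 50_000, 0.4))  # only 40% go to product pages
```

With the example’s 200 requests per day all spent on products, one full pass takes about 9 months; if only 40% of requests reach product pages, it takes 21.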

Common Problems That Waste Crawl Budget

These are things that often make search engines spend time on “bad” URLs instead of your important pages:

- URLs with parameters (filters/sorts) that generate many versions of nearly the same content.

- Duplicate content (content that is the same or almost the same, reached via different URLs).

- Low‑quality pages (thin content, little value).

- Broken links or redirect chains.

- Including URLs in your XML sitemap that are not indexable or that you don’t want indexed.

- Pages that load slowly or frequently time out.

- Linking heavily to “non‑indexable” pages, so crawlers keep requesting them.

- Poor internal link structure, so important pages are “buried” and hard for crawlers to reach.
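Several of these problems, parameter URLs and duplicates in particular, show up as many spellings of what is really one page. One rough way to spot them in a crawl export or log sample is to reduce each URL to a normalized key and group the URLs that share it. In this Python sketch the `IGNORED_PARAMS` set is hypothetical (which parameters are safe to ignore depends on your site), and treating trailing-slash variants as the same page is an assumption.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that only re-sort or track, so they never change the content shown.
IGNORED_PARAMS = {"sort", "utm_source", "utm_medium", "utm_campaign", "sessionid"}

def normalize(url: str) -> str:
    """Reduce a URL to a comparison key: lowercase host, no trailing slash,
    ignored parameters dropped, remaining parameters sorted."""
    parts = urlsplit(url)
    query = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS)
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(query), ""))

def duplicate_groups(urls):
    """Group URLs that normalize to the same key; groups of 2+ are likely duplicates."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}

if __name__ == "__main__":
    crawled = [  # made-up crawled URLs
        "https://example.com/products?color=black&size=7",
        "https://example.com/products?size=7&color=black",
        "https://example.com/products?color=black&size=7&sort=price_asc",
        "https://Example.com/products/?color=black&size=7&utm_source=newsletter",
        "https://example.com/bags",
    ]
    for key, members in duplicate_groups(crawled).items():
        print(key, len(members))
```

Each group with more than one member is a candidate for a canonical tag, a redirect, or a crawl rule.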

How to Optimize / Improve Crawl Budget

Here are practical steps, with examples:

| Action | What to Do | Example |
|---|---|---|
| Limit parameter URLs | Prevent search engines from crawling filter and sort URLs that create near-duplicate pages. Use robots.txt rules, URL-parameter settings where your webmaster tools still offer them (Google retired the Search Console URL Parameters tool in 2022), or nofollow on parameter links. | If your site has /products?color=blue&size=7, don’t let such URLs be crawled if they produce near‑duplicate product listings. |
| Prevent or clean up duplicate content | Use canonical tags, redirect duplicate pages, and avoid creating separate pages that barely differ. | If “shoes/boots” and “boots” both exist and show the same content, redirect one to the other or canonicalize it. |
| Fix broken/redirect links | Remove or correct broken links; avoid long redirect chains. | Instead of page A → redirect → redirect → page B, send page A → page B directly. |
| Ensure XML sitemap is clean | Include only pages you want indexed; leave out non‑indexable URLs, error pages, and redirects. Split large sitemaps by section to see which area has many unindexed pages. | Keep one sitemap for the /blog/ section and another for /product/, then check which one has many pages that are not indexed. |
| Improve page load times | Use a faster server, optimized images, caching, etc. The faster your pages (above all, your server responses) load, the more URLs crawlers can fetch in the same amount of time. | Reduce page load time from 3 s to 1 s, and Googlebot may crawl many more pages per day. |
| Reduce non-indexable content | Make sure noindexed pages, pages canonicalized to other URLs, and server-error pages are not heavily linked or included in your sitemap. | If you have admin, internal, or user profile pages that you don’t want in search, make sure they are noindexed, blocked, or removed from the sitemap. |
| Better internal linking | Link to important pages from many places, and structure the site so crawlers can reach deeply nested pages. | If an important blog post from 2018 still gets traffic, link to it from newer articles so it is crawled more often. |
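For the “Fix broken/redirect links” row: given a one-hop redirect map, this Python sketch resolves every redirecting URL to its final destination, so internal links and redirect rules can point there directly, and reports which sources currently go through a chain. The plain dict input is an assumption; you would build it from your server config or a crawler export.

```python
def resolve_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every redirecting URL to its final destination.

    `redirects` maps each source URL to the URL it redirects to (one hop).
    Raises ValueError if the redirects form a loop.
    """
    final = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        while target in redirects:  # the target redirects again: follow the next hop
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        final[source] = target
    return final

def chained_sources(redirects: dict[str, str]) -> dict[str, str]:
    """Return sources that need more than one hop, mapped to where they should point."""
    return {source: dest for source, dest in resolve_redirects(redirects).items()
            if redirects[source] != dest}

if __name__ == "__main__":
    # Hypothetical one-hop redirect map: /a -> /b -> /c -> /d is a chain.
    redirects = {"/a": "/b", "/b": "/c", "/c": "/d", "/x": "/y"}
    print(chained_sources(redirects))  # {'/a': '/d', '/b': '/d'}
```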
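For the “Ensure XML sitemap is clean” row, here is a sketch of splitting sitemaps by section using only Python’s standard library. It assumes you already have a list of indexable URLs (the sample list is made up) and that the first path segment identifies a section; the 50,000-URL cap per file comes from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit from the sitemaps.org protocol

def split_by_section(urls):
    """Group indexable URLs by their first path segment, e.g. /blog/... -> 'blog'."""
    sections = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        sections[segments[0] if segments else "root"].append(url)
    return sections

def build_sitemap(urls) -> bytes:
    """Build one <urlset> document; raises if the list exceeds MAX_URLS."""
    if len(urls) > MAX_URLS:
        raise ValueError("split this section into several sitemap files")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url  # text is XML-escaped
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)

if __name__ == "__main__":
    indexable = [  # made-up list of URLs you want indexed
        "https://example.com/blog/crawl-budget",
        "https://example.com/product/black-boots",
        "https://example.com/product/red-bag",
    ]
    for section, urls in split_by_section(indexable).items():
        xml = build_sitemap(urls)
        print(f"sitemap-{section}.xml: {len(urls)} URLs, {len(xml)} bytes")
```

Submitting each section’s file separately lets you compare how many of its URLs end up indexed.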
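For the “Better internal linking” row, a useful measure is click depth: the fewest clicks needed to reach a page from the homepage. This Python sketch computes it with a breadth-first search over a hypothetical internal-link map (in practice you would build the map from a site crawl). Pages with a high depth, or missing from the result entirely, are the ones crawlers have trouble reaching.

```python
from collections import deque

def click_depth(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Return the fewest clicks from `start` to every reachable page.

    `links` maps each page to the internal pages it links to.
    """
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # BFS reaches each page first via a shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

if __name__ == "__main__":
    # Hypothetical link map for the shoe-store example.
    site = {
        "/": ["/shoes", "/bags", "/blog"],
        "/shoes": ["/shoes/boots"],
        "/shoes/boots": ["/product/black-boots"],
        "/blog": ["/blog/2024-trends"],
        "/blog/2024-trends": ["/blog/2018-guide"],
    }
    known_pages = set(site) | {"/bags", "/product/black-boots",
                               "/blog/2018-guide", "/product/red-bag"}
    depths = click_depth(site)
    print({page: d for page, d in depths.items() if d >= 3})  # deep pages
    print(known_pages - set(depths))  # orphans: no internal path from "/"
```

Here the 2018 post sits three clicks deep; linking to it from a newer article or a category page would bring it closer to the homepage.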

How to Increase Your Crawl Budget (if Needed)

If after optimization you still need more crawl capacity (for instance, because your site keeps growing), you can improve your site’s overall authority and health:

- Get more external backlinks (other sites linking to you). Sites with higher “authority” tend to be crawled more frequently.

- Maintain good site health (few errors, high performance).

- Keep content fresh and updated, so that demand to crawl increases.

Summary & Quick Checklist

- What it is: How many URLs search engines will crawl over time (based on limit + demand).

- Why it matters: Wasted crawl means search engines visit unimportant or duplicate parts of your site instead of your main content, which can slow down and weaken how your important pages get indexed and ranked.

- Main fixes:

  - Reduce duplicate and parameter URLs.

  - Clean up XML sitemaps.

  - Fix broken links and redirect chains.

  - Improve load speed.

  - Improve internal link structure.

If your site is under ~10,000 pages, crawl budget isn’t usually something to worry too much about—but following the best practices still helps. For larger sites, it’s often essential.

With 5+ years of SEO experience, I’m passionate about helping others boost their online presence. I share actionable SEO tips for everyone—from beginners to experts.